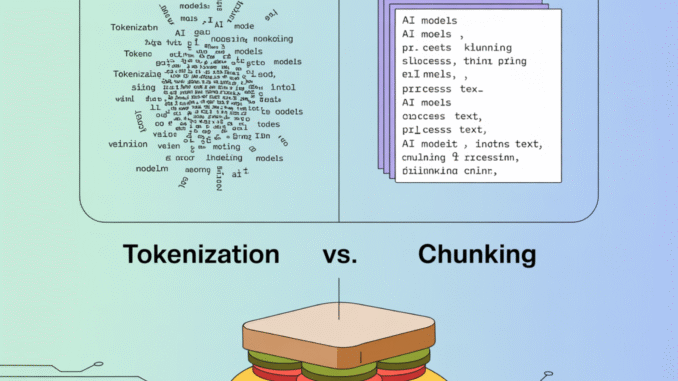

Chunking vs. Tokenization: Key Differences in AI Text Processing

Introduction

When you’re working with AI and natural language processing, you’ll quickly encounter two fundamental concepts that often get confused: tokenization and chunking. While both involve breaking down text into smaller pieces, they serve completely […]